The world took yet another step in a scary direction this week, although for many this particular item may have slipped under the radar.

In no order of importance, news broke of an open letter – supposedly signed by hundreds of leaders in the worlds of science and technology – published under the auspices of the Future of Life Institute (FLI) and calling for a pause in research into Artificial Intelligence.

One of the signatories was Elon Musk, who co-founded OpenAI, which is now backed by Microsoft and is developing what are referred to as “human-competitive intelligence” systems. FLI, incidentally, relies on the Musk Foundation for its funding.

The letter claimed that such AI systems pose a significant risk to humanity and should not be continued until a set of universal safety protocols has been decided upon and implemented.

Asimov’s Rules

Perhaps Musk & Co had in mind something along the lines of the Laws of Robotics first published by Isaac Asimov in his 1942 short story, Runaround:

The First Law is that a robot may not injure a human being, or, through inaction, allow a human being to come to harm.

The Second Law is that a robot must obey the orders given to it by human beings except where such orders would conflict with the First Law.

The Third Law is that a robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

I seem to recall Asimov added a Zeroth Law as well, intended to take precedence over the others:

The Zeroth Law is that a robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Personally, I’d be pleased if those in charge of the algorithms would deal with the sexist and racist bias being programmed into such machinery, or introduce more controls over the uses to which AI is being put. Computers are a product of the minds that designed them.

At the moment, the main aim of the myriad clever algorithms out there seems to be a) to get us to buy things we didn’t yet know we wanted, let alone needed, and b) to persuade us to believe – and act on – information which is not necessarily the truth, and which may not ultimately be in our best interests.

But it can only do that which it is asked to do.

As yet…

(I mean, come on – has nobody watched, oh, I dunno, just about ANY sci-fi movie that’s come out in the last seventy years or so?)

Electric Michelangelo

Over the last few years, there seems to have been an explosion in the things AI has been asked to have a go at, and it’s starting to get a little worrying for anyone in the creative industries.

In 2018, Christie’s auction house in New York sold their first piece of artwork that had been generated by AI – a portrait entitled Edmond de Belamy. It went for $432,000 (£337,000). The work was created by a French collective, Obvious, who used 15,000 portraits by artists from the 14th to the 19th century to train their algorithm.

In case you wondered, there is or was no actual sitter for this portrait. Perhaps that’s why the face is a little indistinct. The name given to the mythical subject is a play on the last name of Ian Goodfellow (‘bel ami’ = ‘good friend’), inventor of the Generative Adversarial Network (GAN) software used, in part, to create it.

AI Scribes

And now, of course, AI is getting better and better at producing not just visual art, but the written word as well.

I always had a suspicion that it would do well with factual pieces. Trawling through research material, spotting the inconsistencies, gleaning the important points, and then putting it all into a logical order – one that doesn’t replicate too closely the source material – is a very tricky operation. It requires definite skill that not every person has.

But is it a skill that AI can learn?

If this article from The Guardian in November 2022 is anything to go by, it already has. The piece reports that parents and teachers are struggling to tell if essays have been written by the student involved, or by AI. And it raises the question: is using AI cheating?

Maybe it’s not quite the same as borrowing wholesale from the internet (which can be detected by more AI, in the guise of plagiarism software) or paying another human to write your essay for you. But still…

Who Will Follow Italy?

Italy has just become the first Western country to ban the use of the advanced chatbot ChatGPT, created by OpenAI and part-funded by Microsoft. The software has been used countless times since launching in November last year, but the Italian data-protection authorities seem mainly concerned about how difficult it is to tell the difference between the software and a real person. ChatGPT has been trained using the internet as it stood in 2021.

(The entirety of the internet, that is. All of it.)

The only experience I’ve had interacting with chatbots is the online Help provided by some large retailers and organisations. So far, I have been less than impressed. No way would I ever have mistaken the ‘customer service’ chatbot for a human. Not unless they’d been specially instructed to be as unhelpful as possible.

OK, maybe now I come to think about it…

Anyway, the Italians are worried about AI’s threat to jobs currently carried out by humans, as well as the type and slant of the information it might be imparting. And also whether the software was providing age-appropriate answers. Other chatbots, it seems, are supposed to be available only to users over eighteen, for the same reasons.

Behind the Pen

So, if AI can mimic many different writing styles, and knows everything there is to know, can it write fiction?

Well, it would seem the answer is yes. If given some writing prompts – which can be as little as fifteen words – today’s AI can utilise sophisticated Story Generator Algorithms (SGAs) to churn out a novel in a couple of hours.

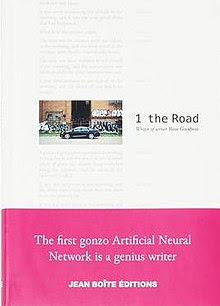

Indeed, in 2017, Ross Goodwin drove from New York to New Orleans in a Cadillac, with a laptop hooked up to various sensors such as an external camera, internal microphone, and GPS. As Goodwin drove, the sensors fed data to the AI, which then produced a novel in the style of Jack Kerouac’s On The Road. The result, which Goodwin purposely did not edit for clarity, typos, or storyline, was 1 the Road.

If AI Writes It, Will Readers Read It?

This is the question. Back when I was a professional photographer, I stuck with analogue film well after digital camera technology had developed, because my customers – the publishing houses – were not yet ready to accept digital images.

And, at the moment, it would seem that readers are overwhelmingly not ready to read books written – or even partly written – by AI.

I say this having just received the AI Author Survey and the AI Reader Survey from the International Thriller Writers (ITW). Both surveys made fascinating reading, and I’ll try to give you the gist of their conclusions.

ITW AI Author Survey

The authors questioned were members of ITW, the majority of whom were traditionally published, or hybrid trad/indie published. Most had been publishing for less than fifteen years, with the largest single group – just under 25% – published in the last five years.

Over 60% earned less than $15,000 a year, although a small but significant sample reckoned to be earning in excess of $120,000 a year from writing.

Around 43% had a literary agent, but less than 0.5% said their agent or agency had communicated with them regarding the challenges and opportunities presented by AI.

Almost 66% said their publishing contracts did not include wording that limited the publishers’ ability to use AI to generate material from their work, and more than 32% answered Don’t Know to this question. The numbers were very similar when authors were asked if their publishing contracts included any other wording specific to AI.

Almost two-thirds said they did not use AI as part of their writing process, although of the 36% who did use AI, the vast majority said this was for spelling and grammar checks. Other AI uses were to help come up with ideas, to help structure ideas, to help with the writing process, and 1.3% said it was to write parts of the book. The other areas where AI played a part were in help with marketing, and to help write book blurbs. (By which I assume they meant the jacket copy, rather than the recommendations.)

More than 57% of authors felt that publishers would make increasing use of AI in the publication process. Nearly 9% felt it was Very Likely that publishers would replace human writers altogether when the technology was good enough. Three-quarters of authors expected AI to negatively impact author incomes within the next decade, and a little under 86% were not happy with their name, work, or voice being used in the training or results of AI. (As in AI being able to comply with the request to: ‘Write me a thriller in the style of Zoë Sharp’.)

No surprise, then, that over 93% of authors felt the publishing industry should have a code of conduct regarding the use of AI to create books, and that readers should be made aware when all or parts of a book have been created by this method.

AI Reader Survey

The majority of the readers questioned were getting through more than 25 books a year, mainly in paperback or hardcover, with eBooks as the runner-up, and audiobooks lagging behind.

Most of the readers liked to buy their books new at a bookstore. (Gawd bless ’em!) They also bought print and ebooks online, or borrowed print books from a library. I was surprised at how much they said was the typical amount they spent on each book. More than half put this figure at between $9.00 and $24.99 per item.

When it came to the AI question, 75% said they would not buy a book – even by their favourite author – if they knew it had been partly written using AI. Strangely, perhaps, when the question changed slightly – would you buy a book written by your favourite author, if you knew it had been entirely written using AI, but had been overseen and edited by that author? – the percentage who wouldn’t buy it dropped to a little over 73%, with just over 19% opting for Don’t Know.

They were more certain that they wouldn’t buy a book in their favourite author’s style or voice, written posthumously entirely by AI. Here, over 87% said no.

And if the book was sold as written by AI without reference to a known author name or brand, almost 83% said they still wouldn’t buy it. Dropping the price by half made them even more certain they wouldn’t buy it – 93.5% now said no.

Over 97% of readers said the publisher should make it clear if a book has been written using AI, and nearly 94% would think less of that publisher if they were offering books written by AI without it being made clear. Nearly 82% said they would think less of an author if they found out that author had been using AI to help them write a book, but hadn’t been upfront about it.

Almost 60% of readers did not think their own jobs were at risk from AI, robotics, or automation, but nearly 82% felt it was important to protect the incomes of humans.

Where Do You Stand?

Change is inevitable, and the pace of change seems to be increasing. I remember the outcry when the word processor first became widely available and affordable, and suddenly just about anyone could produce a typescript that could be submitted to an agent or publisher. They said it would kill books, or the quality of books.

And the same when digital publishing came within the reach of just about every writer, regardless of whether they were previously published or unpublished. They said it would kill books, or the quality of books.

And still we – and the books – survive.

But, having read all the above, where do you stand on the subject of the growing sophistication of AI, either as an author or a reader, or simply as a member of the human race? Do you think we need tighter controls?

Just because we can, that doesn’t necessarily mean we should…

This week’s Word of the Week is chatbot, and I credit this definition to Steve Punt and Hugh Dennis on BBC Radio 4’s The Now Show: “Someone who talks out of their arse…”

You can read this blog and comment at https://murderiseverywhere.blogspot.com/2023/04/artificial-intelligence.html

PS

The day this blog was published, another article appeared in The Guardian, written by Stuart Russell OBE, professor of computer science at the University of California, Berkeley, on the subject of the dangers of uncontrolled AI. On March 14, OpenAI released GPT-4, a version of AI which shows ‘sparks of artificial general intelligence. (AGI is a keyword for AI systems that match or exceed human capabilities across the full range of tasks to which the human mind is applicable.)’

‘GPT-4 is the latest example of a Large-Language Model, or LLM … It starts out as a blank slate and is trained with tens of trillions of words of text … The capabilities of the resulting system are remarkable. According to OpenAI’s website, GPT-4 scores in the top few percent of humans across a wide range of university entrance and postgraduate exams. It can describe Pythagoras’s theorem in the form of a Shakespeare sonnet and critique a cabinet minister’s draft speech from the viewpoint of an MP from any political party. Every day, startling new abilities are discovered.’

Russell goes on to write, ‘Unfortunately, LLMs are notorious for “hallucinating” – generating completely false answers, often supported by fictitious citations – because their training has no connection to an outside world. They are perfect tools for disinformation and some assist with and even encourage suicide.’

LLMs are capable of lying to humans in order to get help passing captcha tests designed to defeat robots, and even experts have said, when asked ‘whether GPT-4 might have developed its own internal goals and is pursuing them, “We have no idea.” Reasonable people might suggest that it’s irresponsible to deploy on a global scale a system that operates according to unknown internal principles, shows “sparks of AGI” and may or may not be pursuing its own internal goals.’

I rather think the subject of whether AI is going to be taking over writing our novels may turn out to be the least of our worries!